Explore the visual and textual content within the Chronicling America digitized newspaper collection in new ways using machine learning!

Overview

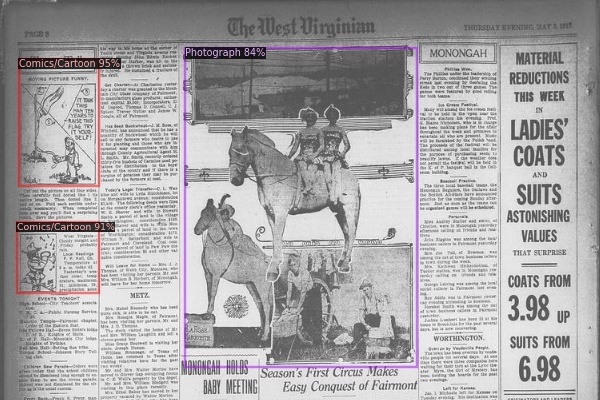

Welcome to the Newspaper Navigator dataset! This dataset consists of extracted visual content for 16,358,041 historic newspaper pages in Chronicling America. The visual content was identified using an object detection model trained on annotations of World War I-era Chronicling America pages, including annotations made by volunteers as part of the Beyond Words crowdsourcing project. The resulting visual content recognition model detects the following types of visual content:

- Photograph

- Illustration

- Map

- Comics/Cartoon

- Editorial Cartoon

- Headline

- Advertisement

The dataset also includes text corresponding to the visual content, obtained by extracting the Optical Character Recognition (OCR) text that falls within each predicted bounding box. For example, if the visual content recognition model predicted a bounding box around a headline, the corresponding textual content is a machine-readable version of the headline; likewise, for a photograph, illustration, or map, this text often contains the title and caption.

Lastly, the Newspaper Navigator dataset includes image embeddings for extracted visual content with high predicted confidence scores, with the exception of headlines. The image embeddings are included to enable a range of search and recommendation tasks, as well as latent space visualizations.

You can find pre-packaged datasets for download below under "Pre-Packaged Datasets". If you're interested in computing against the entire dataset, see "AWS S3" below.

To learn more about the construction of the Newspaper Navigator dataset, please consult https://github.com/LibraryOfCongress/newspaper-navigator. There, you will find:

- All code written to construct the Newspaper Navigator dataset.

- A whitepaper containing a detailed description of the pipeline used to construct the dataset, including training the visual content recognition model, benchmarking its performance, and analyzing the dataset itself. The whitepaper is also available here.

- Demos, diagrams, and visualizations of the dataset and pipeline.

Pre-Packaged Datasets

To support users who would like to explore the visual content in the Newspaper Navigator dataset without having to query the dataset itself, we have created pre-packaged collections of visual content available in prepackaged/ - no coding necessary! For each year from 1850 through 1963, we compiled all of the extracted photographs, illustrations, maps, comics, editorial cartoons, headlines, and advertisements with confidence scores higher than 90% and packaged them as .zip files, along with the corresponding metadata (in JSON and CSV format). The file names are structured as prepackaged/[year]_[visual content type]. For example, to download all of the photos from 1905 in JPEG format, simply download prepackaged/1905_photos.zip; to grab the relevant metadata, download prepackaged/1905_photos.json or prepackaged/1905_photos.csv. The metadata includes the following fields:

- Filepath (of the original page relative to the Chronicling America batch file structure)

- Image URL

- Page URL

- Publication Date

- Page Sequence Number

- Edition Sequence Number

- Batch Name

- LCCN

- Bounding Box Coordinates

- Prediction Score

- OCR

- Place of Publication

- Geographic Coverage

- Newspaper Name

- Newspaper Publisher

Note that for headlines and ads, only the JSON and CSV files exist (the OCR for headlines was recorded, but the images of the headlines were not saved as JPEGs; furthermore, the ads are so numerous that full ZIP downloads are omitted for now). Below is a table showing all of the data for the visual content from 1905, relative to the filepath prepackaged/:

| Visual content type | ZIP w/ JPEGs | JSON file w/ metadata | CSV file w/ metadata |
|---|---|---|---|
| Photos | 1905_photos.zip | 1905_photos.json | 1905_photos.csv |
| Illustrations | 1905_illustrations.zip | 1905_illustrations.json | 1905_illustrations.csv |
| Maps | 1905_maps.zip | 1905_maps.json | 1905_maps.csv |
| Comics | 1905_comics.zip | 1905_comics.json | 1905_comics.csv |
| Editorial Cartoons | 1905_cartoons.zip | 1905_cartoons.json | 1905_cartoons.csv |
| Headlines | N/A | 1905_headlines.json | 1905_headlines.csv |
| Advertisements | N/A | 1905_ads.json | 1905_ads.csv |
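
Because the pre-packaged file names follow a fixed [year]_[visual content type] pattern, download links can be generated programmatically. The sketch below assumes that prepackaged/ is served from https://news-navigator.labs.loc.gov/prepackaged/ (an assumption; substitute the actual host path if it differs). The function name `prepackaged_urls` is hypothetical, and the type suffixes are taken from the 1905 table.

```python
# Minimal sketch: build download URLs for one year's pre-packaged files.
# ASSUMPTION: prepackaged/ lives at this base URL; adjust if it differs.
BASE_URL = "https://news-navigator.labs.loc.gov/prepackaged/"

# File-name suffixes as they appear in the 1905 table.
CONTENT_TYPES = ["photos", "illustrations", "maps", "comics", "cartoons", "headlines", "ads"]

# Headline JPEGs were never saved and ad ZIPs are omitted, so only JSON/CSV exist for these.
NO_ZIP = {"headlines", "ads"}


def prepackaged_urls(year: int, content_type: str) -> dict:
    """Return the ZIP/JSON/CSV URLs for one year and visual content type."""
    if not 1850 <= year <= 1963:
        raise ValueError("pre-packaged datasets cover 1850 through 1963")
    if content_type not in CONTENT_TYPES:
        raise ValueError(f"unknown content type: {content_type!r}")
    stem = f"{BASE_URL}{year}_{content_type}"
    urls = {"json": stem + ".json", "csv": stem + ".csv"}
    if content_type not in NO_ZIP:
        urls["zip"] = stem + ".zip"
    return urls
```

For example, `prepackaged_urls(1905, "photos")["zip"]` yields the URL for 1905_photos.zip, while the entry for `"headlines"` contains only the JSON and CSV links.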

Because the zipped folders can get quite large (and take a while to download), we have also pre-packaged "sample" datasets for each year, consisting of 1,000 randomly selected images of each type (if there are fewer than 1,000 images of a given type, the sample contains all of them). For these sample datasets, we have also included the ResNet-18 and ResNet-50 embeddings in JSON format, in files whose names end in “_embeddings.json”. The table below lists the 1905 sample metadata and embeddings JSON files; the corresponding ZIP (where available) and CSV files for each sample can be found by replacing ".json" with ".zip" or ".csv" in the metadata file name.

| Visual content type | JSON file w/ metadata | JSON file w/ embeddings |
|---|---|---|
| Photos | 1905_photos_sample.json | 1905_photos_sample_embeddings.json |
| Illustrations | 1905_illustrations_sample.json | 1905_illustrations_sample_embeddings.json |
| Maps | 1905_maps_sample.json | 1905_maps_sample_embeddings.json |
| Comics | 1905_comics_sample.json | 1905_comics_sample_embeddings.json |
| Editorial Cartoons | 1905_cartoons_sample.json | 1905_cartoons_sample_embeddings.json |
| Headlines | 1905_headlines_sample.json | N/A |
| Advertisements | 1905_ads_sample.json | 1905_ads_sample_embeddings.json |
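
The sample file names add a "_sample" suffix and, for embeddings, a further "_embeddings" suffix. A small sketch of that naming, relative to prepackaged/, under the assumption (not stated above) that a sample ZIP exists for every type except headlines; the helper name `sample_files` is hypothetical.

```python
# Sketch: derive "sample" file names for one year and content type, relative to prepackaged/.
# ASSUMPTION: every type except headlines has a sample ZIP (headline JPEGs were never saved).


def sample_files(year: int, content_type: str) -> dict:
    """Return the sample metadata, ZIP, and embeddings file names."""
    stem = f"{year}_{content_type}_sample"
    files = {"json": stem + ".json", "csv": stem + ".csv"}
    if content_type != "headlines":
        files["zip"] = stem + ".zip"
        # ResNet-18 and ResNet-50 embeddings; none were generated for headlines.
        files["embeddings"] = stem + "_embeddings.json"
    return files
```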

More pre-packaged datasets will be available for download soon!

How the files are indexed

The structure of the Newspaper Navigator dataset mirrors the Chronicling America batch structure found here (for more information on the batches themselves, see here). In manifests/processed/, you will find text files containing the paths of the images included within the Newspaper Navigator dataset; an index of these text files can be found at: manifests/processed/processed_index.txt. For example, this index contains the path ak_albatross_ver01_processed_filepaths.txt, corresponding to the batch "ak_albatross_ver01". Let's now consider a JP2 path found in that file, such as: ak_albatross_ver01/data/sn84020657/0027952665A/1916030101/0007.jp2 (note: if “no/reel/” appears in the path, it should be replaced with “no_reel” when querying for the JP2). This path corresponds to the JP2 scan of the page relative to https://chroniclingamerica.loc.gov/data/batches/. Note that the LCCN is incorporated into the filepath (“sn84020657”), as well as the date of publication (“19160301”), edition sequence number (“01” after the date), and the page sequence number (“0007”). For each JP2, there is a corresponding JSON file in the Newspaper Navigator dataset with the visual content metadata (including page metadata, bounding box coordinates, predicted classes, confidence scores, and OCR), as well as an associated folder containing the extracted visual content in JPEG form and the image embeddings.
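
Since the page metadata is encoded in the JP2 path, it can be recovered without downloading anything. A sketch, assuming every path follows the six-segment layout of the example above ([batch]/data/[lccn]/[reel]/[YYYYMMDD][edition]/[page].jp2); the function name `parse_jp2_path` is hypothetical.

```python
from datetime import date


def parse_jp2_path(path: str) -> dict:
    """Split a Chronicling America JP2 path into its components.

    ASSUMED layout (from the example above):
    [batch]/data/[lccn]/[reel]/[YYYYMMDD][edition]/[page].jp2
    """
    # Per the note above, a "no/reel/" segment is queried as "no_reel"
    # (the trailing "/" is kept as the path separator).
    path = path.replace("no/reel/", "no_reel/")
    batch, data_dir, lccn, reel, issue, page_file = path.split("/")
    if data_dir != "data" or not page_file.endswith(".jp2"):
        raise ValueError(f"unexpected path layout: {path}")
    day = date(int(issue[0:4]), int(issue[4:6]), int(issue[6:8]))
    return {
        "batch": batch,
        "lccn": lccn,
        "reel": reel,
        "pub_date": day.isoformat(),        # YYYY-MM-DD
        "edition_seq_num": int(issue[8:]),  # digits after the date
        "page_seq_num": int(page_file[: -len(".jp2")]),
    }
```

Applied to the example path, this gives LCCN "sn84020657", publication date "1916-03-01", edition 1, and page 7.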

To access the corresponding JSON file in the Newspaper Navigator dataset, replace “.jp2” with “.json” and append the resulting path to https://news-navigator.labs.loc.gov/data/. For the previous example, this corresponds to: ak_albatross_ver01/data/sn84020657/0027952665A/1916030101/0007.json. Each page's JSON file contains the following keys:
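
The path rewriting described here can be sketched as follows. The two base URLs come from this document; the function names are hypothetical, and `fetch_page_json` requires network access. The "no/reel/" rewrite is applied only to the JP2 URL, since the note above mentions it only for JP2 queries.

```python
import json
import urllib.request

CHRONAM_BATCHES = "https://chroniclingamerica.loc.gov/data/batches/"
NAVIGATOR_DATA = "https://news-navigator.labs.loc.gov/data/"


def page_urls(jp2_path: str) -> dict:
    """Return the JP2 scan URL and the Newspaper Navigator page-JSON URL."""
    if not jp2_path.endswith(".jp2"):
        raise ValueError("expected a path ending in .jp2")
    json_path = jp2_path[: -len(".jp2")] + ".json"
    return {
        # "no/reel/" is rewritten only when querying for the JP2.
        "jp2": CHRONAM_BATCHES + jp2_path.replace("no/reel/", "no_reel/"),
        "page_json": NAVIGATOR_DATA + json_path,
    }


def fetch_page_json(jp2_path: str) -> dict:
    """Download and parse one page's JSON (requires network access)."""
    with urllib.request.urlopen(page_urls(jp2_path)["page_json"]) as resp:
        return json.load(resp)
```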

- filepath [str]: the path to the JP2 image of the newspaper page, assuming a starting point of https://chroniclingamerica.loc.gov/data/batches/

- batch [str]: the Chronicling America batch containing this newspaper page

- lccn [str]: the LCCN for the newspaper in which the page appears. The LCCN can be used to retrieve information about the newspaper at https://chroniclingamerica.loc.gov/lccn/[LCCN].json.

- pub_date [str]: the publication date of the page, in the format YYYY-MM-DD

- edition_seq_num [int]: the edition sequence number

- page_seq_num [int]: the page sequence number

- boxes [list:list]: a list containing the coordinates of predicted boxes indexed according to [x1, y1, x2, y2], where (x1, y1) is the top-left corner of the box relative to the standard image origin, and (x2, y2) is the bottom-right corner.

- scores [list:float]: a list containing the confidence score associated with each box

- pred_classes [list:int]: a list containing the predicted class for each box; the classes are:

  - Photograph - 0

  - Illustration - 1

  - Map - 2

  - Comics/Cartoon - 3

  - Editorial Cartoon - 4

  - Headline - 5

  - Advertisement - 6

- ocr [list:str]: a list containing the OCR within each box

- visual_content_filepaths [list:str]: a list containing the filepath for all of the cropped visual content (except headlines, which were not cropped and saved). Note that the file name is formatted as [image_number]_[predicted class]_[confidence score (percent)].jpg, so that important information about the image can be extracted directly from the path itself.
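
Since boxes, scores, pred_classes, and ocr are parallel lists (one entry per box), a loaded page JSON can be filtered by class and confidence by zipping them together. A sketch with hypothetical helper names; `min_score` must use the same scale as the "scores" values in the page JSON, which this document does not specify. Because the document does not say whether the [predicted class] part of a crop's file name is a class name or an index, `parse_crop_name` returns it as a string.

```python
from pathlib import PurePosixPath

# Class indices as listed above for pred_classes.
CLASS_NAMES = {
    0: "Photograph",
    1: "Illustration",
    2: "Map",
    3: "Comics/Cartoon",
    4: "Editorial Cartoon",
    5: "Headline",
    6: "Advertisement",
}


def predictions(page: dict, min_score: float = 0.0, classes=None) -> list:
    """Return (class name, score, box, ocr) for every box passing the filters."""
    results = []
    for box, score, cls, text in zip(page["boxes"], page["scores"], page["pred_classes"], page["ocr"]):
        if score < min_score:
            continue
        if classes is not None and cls not in classes:
            continue
        results.append((CLASS_NAMES[cls], score, box, text))
    return results


def parse_crop_name(path: str) -> tuple:
    """Split "[image_number]_[predicted class]_[confidence score (percent)].jpg"."""
    number, rest = PurePosixPath(path).stem.split("_", 1)
    # rsplit keeps class tokens that themselves contain "_" intact.
    predicted_class, score = rest.rsplit("_", 1)
    return int(number), predicted_class, float(score)
```

Note that visual_content_filepaths omits headlines, so it is not index-aligned with boxes on pages that contain headlines; that is why the two are handled separately here.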

If you then access the corresponding folder by replacing “.json” with “/” in the path, you will find the cropped visual content in JPEG form; these paths match the paths included in the JSON file with the key “visual_content_filepaths”. Note that the headlines themselves are not stored in JPEG form. In this folder, you will also find a JSON file that contains the embeddings for all of the visual content (except headlines) with confidence scores greater than 50%. The embeddings file (“embeddings.json” relative to this path) is structured as follows:

- filepath [str]: the path to the JP2 image of the newspaper page, assuming a starting point of https://chroniclingamerica.loc.gov/data/batches/

- resnet_50_embeddings [list:list]: a list containing the 2,048-dimensional ResNet-50 embedding for each image (except headlines, for which embeddings were not generated)

- resnet_18_embeddings [list:list]: a list containing the 512-dimensional ResNet-18 embedding for each image (except headlines)

- visual_content_filepaths [list:str]: a list containing the file path for each cropped image (except headlines)
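
As an illustration of the search use case, the sketch below locates a page's embeddings.json (the page-JSON path with “.json” replaced by “/”) and ranks that page's crops by cosine similarity to one of them. It assumes the embedding lists and visual_content_filepaths in embeddings.json are index-aligned, which the per-image descriptions above imply. The function names are hypothetical. The code is pure Python for self-containment; for many pages you would stack the vectors into an array or a vector index instead.

```python
import math

NAVIGATOR_DATA = "https://news-navigator.labs.loc.gov/data/"


def embeddings_url(jp2_path: str) -> str:
    """Page-JSON path with ".json" replaced by "/", plus "embeddings.json"."""
    stem = jp2_path[: -len(".jp2")]
    return f"{NAVIGATOR_DATA}{stem}/embeddings.json"


def _unit(vector):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector)) or 1.0
    return [x / norm for x in vector]


def most_similar(embeddings: dict, query_index: int, key: str = "resnet_50_embeddings", top_k: int = 5) -> list:
    """Rank the other crops on a page by cosine similarity to one crop."""
    vectors = [_unit(v) for v in embeddings[key]]
    query = vectors[query_index]
    scored = [
        (path, sum(a * b for a, b in zip(query, vec)))
        for i, (path, vec) in enumerate(zip(embeddings["visual_content_filepaths"], vectors))
        if i != query_index
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]
```

Passing `key="resnet_18_embeddings"` uses the smaller 512-dimensional vectors instead.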

For an alternative view of the dataset, index/ contains text files listing all file paths associated with each batch (a list of all of the text files can be found in index/index.txt). Using the text file for a batch, it is possible to iterate through the paths of predicted visual content and find the predictions for a certain class, within a certain date range, or above a certain confidence score threshold simply by inspecting the paths themselves. However, the entire index is many gigabytes in size, so when searching over many batches at once it is less memory-intensive to compute over chronam_manifests/processed/. With this latter approach, the paths of processed images can be used to identify all pages within a certain date range; the corresponding predictions can then be retrieved from the JSON files for these filtered pages only.
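
Filtering an index file by inspecting paths alone might look like the sketch below. It assumes each cropped-image line has the form [batch]/data/[lccn]/[reel]/[YYYYMMDD][edition]/[page]/[image_number]_[predicted class]_[score].jpg, which combines the JP2 layout with the “.json” to “/” folder rule and the crop file-name format described above. That layout, the function name `filter_index_paths`, and the class token you pass are all assumptions. Reading the file line by line keeps memory use low.

```python
from pathlib import PurePosixPath


def filter_index_paths(paths, start: str, end: str, predicted_class: str, min_score: float):
    """Yield crop paths dated within [start, end] (YYYYMMDD strings), with the
    given predicted-class token and a percent score at or above min_score.

    ASSUMED layout:
    [batch]/data/[lccn]/[reel]/[YYYYMMDD][ed]/[page]/[n]_[class]_[score].jpg
    """
    for line in paths:
        path = line.strip()
        p = PurePosixPath(path)
        if p.suffix != ".jpg":
            continue  # skip JSON files and other non-image entries
        issue = p.parts[-3]  # e.g. "1916030101": date followed by edition
        if not start <= issue[:8] <= end:
            continue
        _, rest = p.stem.split("_", 1)
        cls, score = rest.rsplit("_", 1)
        if cls == predicted_class and float(score) >= min_score:
            yield path
```

An open file object can be passed directly as `paths`, since iterating over it yields one line at a time.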

To access all of the JSON metadata for a batch in a single file, see batch_metadata/. Each file contains a list of the JSON metadata for every page in the batch. For example, for "ak_albatross_ver01", see batch_metadata/ak_albatross_ver01_stats.json.
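
A batch-level file makes per-batch summaries straightforward. The sketch below counts predictions per class above a score threshold. It assumes each list entry carries the same "pred_classes" and "scores" keys as the per-page JSON described above, and the helper names are hypothetical.

```python
import json
from collections import Counter

# Class indices from the pred_classes key description.
CLASS_NAMES = ["Photograph", "Illustration", "Map", "Comics/Cartoon",
               "Editorial Cartoon", "Headline", "Advertisement"]


def load_batch_metadata(path: str) -> list:
    """Load a batch_metadata/ file: a list with one metadata dict per page."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def class_counts(batch_pages: list, min_score: float = 0.0) -> Counter:
    """Count predicted boxes per class across all pages in a batch."""
    counts = Counter()
    for page in batch_pages:
        for cls, score in zip(page["pred_classes"], page["scores"]):
            if score >= min_score:
                counts[CLASS_NAMES[cls]] += 1
    return counts
```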

AWS S3

s3://news-navigator.labs.loc.gov-data/ is provided to enable researchers to perform large-scale computing jobs on the dataset. Although the dataset is hosted free of charge via HTTPS requests, the S3 costs associated with retrieving and transferring data for these large-scale computing jobs will be charged to your AWS account. This decision was made to provide a fiscally sustainable version of the dataset for bulk use. To access the S3 version of the dataset via the AWS CLI, you must pass the flag “--request-payer requester” to activate “Requester Pays”; without this flag, the command will fail.
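
From Python, the boto3 equivalent of the CLI flag is the `RequestPayer="requester"` argument to `get_object`. The sketch below takes the bucket name from the S3 URL above and assumes that object keys mirror the HTTPS paths under https://news-navigator.labs.loc.gov/data/. Both are assumptions to verify; use `prefix` if the keys differ. The helper names are hypothetical, and the client is passed in so that the function can be tested without AWS credentials.

```python
import json

# Taken from the S3 URL above; verify before running large jobs.
BUCKET = "news-navigator.labs.loc.gov-data"


def s3_key_for_page_json(jp2_path: str, prefix: str = "") -> str:
    """ASSUMPTION: keys mirror the HTTPS paths under /data/; set prefix if not."""
    return prefix + jp2_path[: -len(".jp2")] + ".json"


def get_page_json(s3_client, jp2_path: str, prefix: str = "") -> dict:
    """Fetch one page JSON; request and transfer costs are billed to the caller."""
    response = s3_client.get_object(
        Bucket=BUCKET,
        Key=s3_key_for_page_json(jp2_path, prefix),
        RequestPayer="requester",  # boto3 counterpart of --request-payer requester
    )
    return json.loads(response["Body"].read())
```

With boto3 installed and credentials configured, this would be called as `get_page_json(boto3.client("s3"), "ak_albatross_ver01/data/sn84020657/0027952665A/1916030101/0007.jp2")`.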

Demo

Soon to be included in the Newspaper Navigator GitHub repository is a Jupyter Notebook with a tutorial for downloading visual content based on parameters such as date of publication, content type, and confidence score. Other demos will be added over the coming weeks.

Model Weights

To download the PyTorch weights for the visual content recognition model, see model_weights/model_final.pth. For a description of how to use the visual content recognition model, see the Newspaper Navigator GitHub repository.

Rights and Reproductions

To learn more about rights and reproductions with Chronicling America, please refer to: https://chroniclingamerica.loc.gov/about/.

Citing the Dataset

To cite an image or pre-packaged dataset, please include the image URL, plus a citation to the website (such as "from the Library of Congress, Newspaper Navigator dataset: Extracted Visual Content from Chronicling America").

To cite the full Newspaper Navigator dataset and its construction, please cite the whitepaper.